GREEN DIVERGENCE

This has been an issue from the start.

Most demosaicing algorithms assume that G1 and G2 in the Bayer layout hold more or less the same value, or at least a correct one. In practice this is not always the case. The divergence between G1 and G2 is caused by influence from the other channels (red or blue). This was very apparent on the Leica M8 and M9, where all the algorithms produce highly visible maze patterns in areas where red is dominant: one of the greens on the CCD of these Leicas is clearly influenced by what's happening in the red channel. The M8 is more sensitive to this problem than the M9, but both are problematic. It seems to be mostly a problem on CCD sensors and older cameras; the more recent Leica CMOS cameras (and other brands like Canon, Nikon and Sony) are less prone to it.
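The sketch below is not DNGMonochrome's code. It only makes the divergence concrete. It compares G1 and G2 cell by cell in a raw Bayer mosaic and reports the average difference twice: once for red-dominant cells and once for all other cells. It assumes an RGGB layout and uses a hypothetical `red_ratio` threshold to decide what counts as red-dominant. Real sensors may use a different CFA layout.

```python
# Minimal sketch, assuming an RGGB layout, i.e. each 2x2 cell is
#   R  G1
#   G2 B
# The mosaic is a list of rows of raw values with even width and height.

def green_divergence_by_area(mosaic, red_ratio=1.5):
    """Mean |G1 - G2| in red-dominant cells and in all other cells.

    A cell counts as red-dominant when R > red_ratio * mean(G1, G2).
    red_ratio is a hypothetical threshold chosen for illustration.
    Returns (red_mean, other_mean); a group with no cells yields 0.0.
    """
    red, other = [], []
    for y in range(0, len(mosaic), 2):
        for x in range(0, len(mosaic[0]), 2):
            r = mosaic[y][x]
            g1 = mosaic[y][x + 1]
            g2 = mosaic[y + 1][x]
            diff = abs(g1 - g2)
            if r > red_ratio * (g1 + g2) / 2:
                red.append(diff)
            else:
                other.append(diff)

    def mean(values):
        return sum(values) / len(values) if values else 0.0

    return mean(red), mean(other)
```

Suppose one green picks up signal from red, as described for the M8 and M9. On such a sensor, the first value would be expected to be clearly higher than the second.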

I've since improved the algorithm that tackles this problem. The easiest solution would have been to balance the greens out, and most algorithms probably do this up front. I didn't want to do that. I wanted to keep the green information as close to 50% of the pixels as possible, rather than simply eradicating 25% of it (one of the two greens) when the greens are only an issue in red areas. Apart from concentrating on specific areas of the photo, I also wanted a variable weight, because the problem depends not only on the camera but also on the algorithm used.
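The sketch below contrasts blunt balancing with a selective, weighted correction. It illustrates the idea described above and is not the algorithm DNGMonochrome actually uses. It assumes an RGGB layout and only touches red-dominant cells. A hypothetical `strength` value (0.0 to 1.0) stands in for a slider, and `red_ratio` is a hypothetical threshold.

```python
def balance_greens(mosaic, strength, red_ratio=1.5):
    """Pull G1 and G2 toward their mean, but only in red-dominant RGGB cells.

    strength: 0.0 leaves the greens untouched, 1.0 replaces both by their mean.
    red_ratio: a cell is red-dominant when R > red_ratio * mean(G1, G2).
    Both parameters are hypothetical, not DNGMonochrome settings.
    """
    out = [row[:] for row in mosaic]  # copy so the input mosaic stays intact
    for y in range(0, len(mosaic), 2):
        for x in range(0, len(mosaic[0]), 2):
            r = mosaic[y][x]
            g1 = mosaic[y][x + 1]
            g2 = mosaic[y + 1][x]
            mean_g = (g1 + g2) / 2
            if r > red_ratio * mean_g:
                # Move each green part of the way toward the shared mean.
                out[y][x + 1] = g1 + strength * (mean_g - g1)
                out[y + 1][x] = g2 + strength * (mean_g - g2)
    return out
```

Dropping the red test and always using `strength=1.0` would amount to the blunt, photo-wide balancing the text avoids. Both greens would lose their individual values everywhere, not just where they diverge.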

The problem is that it's hard to find the right amount of correction. It's unclear per sensor under which conditions the greens diverge most, or where in the photo the algorithm suffers most from the divergence (shadows, highlights, mid tones, certain colors, etc.). I can't blame commercial implementations for overdoing this just to be on the safe side. It's impossible to inspect every sensor and come up with a solution that works perfectly on all of them. The alternative (my approach) is to leave it partly to the user. That is dangerous, since you might never read any of this or might just gloss over the issue and end up with patterns in your photos.

Well, at least I tried...

So the new DNGMonochrome contains a special module where these settings can be set and changed per camera and per algorithm. I've already set it to defaults I think are okay for some cameras. But since my testing is limited to a few photos per camera, I can't judge every situation in which this problem might show.
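Conceptually, settings stored per camera and per algorithm amount to a lookup table with a conservative fallback. A value saved once is reused for later conversions of the same camera and algorithm. The sketch below is hypothetical. The field names, the keys and all numbers are placeholders, not DNGMonochrome's real presets or storage format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GreenSetting:
    strength: float   # slider position; 0.0 means 'off'
    red_areas: bool   # restrict correction to red-dominant areas
    blue_areas: bool  # restrict correction to blue-dominant areas


# Placeholder values for illustration only, not the program's real defaults.
CONSERVATIVE = GreenSetting(strength=0.1, red_areas=True, blue_areas=False)

presets = {
    ("Camera A", "Algorithm X"): GreenSetting(0.6, True, False),
}


def setting_for(camera, algorithm):
    """Return the stored setting for this camera/algorithm pair, else the conservative default."""
    return presets.get((camera, algorithm), CONSERVATIVE)


def save_setting(camera, algorithm, setting):
    """Store a user-tuned setting so later conversions with the same pair reuse it."""
    presets[(camera, algorithm)] = setting
```

Keying on the pair, not on the camera alone, reflects the point above that the right amount of correction depends on both the sensor and the interpolation algorithm.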

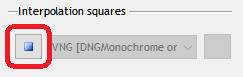

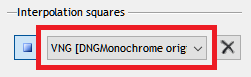

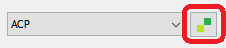

You can change the correction in the 'Green divergence' window, which you open through this button at the bottom left of the program.

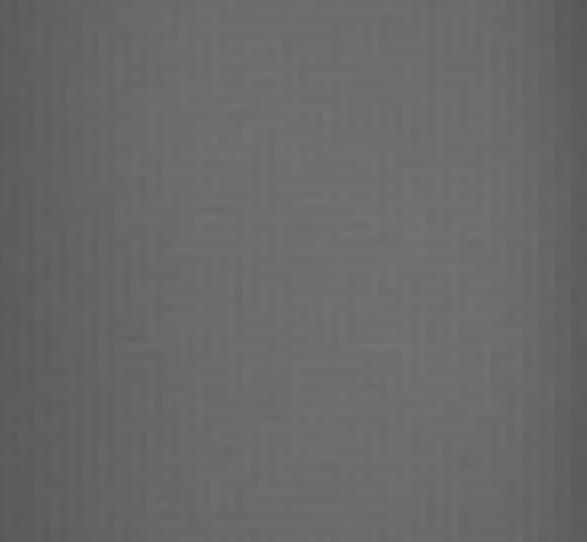

However, the correction itself can also have adverse effects. Move the sliders to a higher setting only if you notice patterns as shown in the following image, usually in originally red areas (you will have to pixel peep and zoom to 400% - 800% to notice them). Keep the slider as low as possible. I do urge you to check for this problem on any photos with obviously red patches in them. Once you've found the right setting, subsequent photos you convert will use it.

800% magnification of a red patch, interpolated with the scrapped AHD, uncorrected (Leica M9)... note that other algorithms might show slightly different patterns, but just as obvious...

800% magnification of the same red patch as above, interpolated with the scrapped AHD, after correcting with the slider for green divergence (Leica M9)...

If you do not notice any problems, leave the sliders at the default setting, or experiment with sliding them lower and possibly to 'off' (run a new conversion after changing any of these settings, and check the result again...).

Correction is definitely needed on the Leica M8, Leica M9 and Leica S (typ 006). The sliders are already preset for those cameras (and for some others). On most cameras the compensation is set to a very conservative default, focusing on red overspill. Turning them off might work, or you may need to increase the value.

The color options (the check boxes 'red areas' and 'blue areas') restrict compensation to areas that are predominantly red or predominantly blue (or both). Turning them on (or one of them) avoids compensating in areas that don't need it. I've only seen this problem pop up in red areas (except on the Leica M8), so the 'blue areas' option seems redundant and can probably be left 'off'. But again: I don't know how every sensor behaves... it might come in handy. Note that if you change the color options, you might have to adjust the sliders.
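Here is one plausible reading of how the two check boxes gate the correction, sketched as a per-cell test. Two parts are assumptions, not documented DNGMonochrome behaviour: the case where both boxes are unchecked (correct everywhere), and the hypothetical `ratio` threshold.

```python
def should_correct(r, g, b, red_areas, blue_areas, ratio=1.5):
    """Decide whether a 2x2 Bayer cell receives green-divergence correction.

    r, g, b: the cell's red value, mean green value and blue value.
    Assumption: with neither box ticked every cell is corrected; ticking a box
    restricts correction to cells where that colour exceeds ratio * green.
    """
    if not red_areas and not blue_areas:
        return True
    red_dominant = red_areas and r > ratio * g
    blue_dominant = blue_areas and b > ratio * g
    return red_dominant or blue_dominant
```

This also shows why changing the color options may require adjusting the sliders. The boxes change which cells are corrected at all, so the same slider value can have a different overall effect.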
